AWS Coffee-Script Web Stack - Part 1: The Development Stack

Part 1 of this series builds up the server components in a stack on top of Ubuntu Linux. By the end of this post, you will have a basic personal website with the Twitter Bootstrap look and feel. Later posts in the series will expand on its capabilities and features, including: adding connect-assets, which lets you include compiled CoffeeScript in rendered templates; session management with redis-server, so that session state persists across server reboots, using OAuth providers such as Google; combining sessions with socket.io for seamless authentication validation; and a simple Model-View-Controller (MVC) structure with classical inheritance.

The stack will consist of three main NodeJS components: node modules, server code, and client code. It will run in two environments: an Internet-facing AWS instance running Ubuntu Server 12.04, and a development environment consisting of Ubuntu Desktop 13.04 with the JetBrains WebStorm 6 Integrated Development Environment (IDE). The code will be stored in a git repository, which keeps a history of all our changes.

Setup Development Environment

So, let's get going and set up our development environment. First, download the latest Ubuntu Linux Desktop, currently 13.04. While you wait for the ISO, go ahead and start building the virtual machine instance. The following screens relate to my virtual host based on VMware Fusion 5.

Virtual Hardware

The minimum to get going smoothly is pretty lightweight compared to other environments such as Microsoft's SharePoint 2013, which requires a good 24GB of RAM for a single development environment. This virtual machine should have at least 2 vCPUs and 2GB of RAM. Depending on how big your project gets (how many files WebStorm needs to keep an eye on), you might need to bump up to 4GB of RAM.

OS Volume

For the OS volume, 20GB should suffice. If you plan on building projects that handle file uploads, provision more storage.

Virtual Machine Network Adapter

I prefer to keep the virtual machine as its own entity on the network. This clears up any issues related to NATing ports and gives our environment the most realistic production feel.

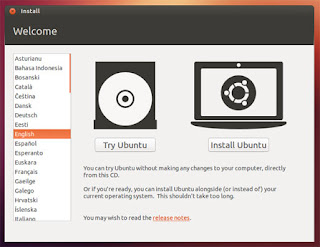

Install Ubuntu OS From Media

Boot up the virtual machine and install Ubuntu.

Update OS

I prefer to keep my Linux machines patched.

$ sudo apt-get update

$ sudo apt-get upgrade

Install Dependencies

Before we can really get going with WebStorm, we need to get vmware-tools installed for all that full-screen resolution glory. Once that is installed, we need to pick up g++, which is required to compile Node from source.

$ sudo apt-get install g++

Now that our Ubuntu Linux Desktop 13.04 development environment is set up, we will install git, Node, and our global node modules and binaries.

Install git, set up a bare repository, use /opt for development

$ sudo apt-get install git

$ sudo mkdir /git

$ sudo mkdir -p /opt

$ sudo chown <username> /opt

$ sudo chown <username> /git

$ mkdir /git/myFirstWebServer.git

$ cd /git/myFirstWebServer.git

$ git --bare init

Install Node v0.10.15 (latest)

$ wget http://nodejs.org/dist/v0.10.15/node-v0.10.15.tar.gz

$ tar zxvf node-v0.10.15.tar.gz

$ cd node-v0.10.15

$ ./configure

$ make

$ sudo make install

Install global node modules and their binaries

$ sudo npm install -g coffee-script express bower

Creating our basic Express Web Server

I prefer to keep all my custom code inside /opt, so we will set up our Express web server there. Set permissions as necessary. We will also initialize git at this time.

$ cd /opt

$ express -s myFirstWebserver

$ cd myFirstWebserver

$ git init

$ npm install

Great. Now that we have our basic Express web server, we can run it with 'node app.js' and visit it at http://localhost:3000 using a web browser.

Next, we want to convert all of our JavaScript files to CoffeeScript. Use an existing converter, such as http://js2coffee.org, then clean up the resulting .coffee files.

Your app.coffee code could look something like this:
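As a minimal sketch, assuming the project was generated by Express 3.x with the -s flag, the converted entry point might resemble the file written below. The middleware and routes mirror what the 3.x generator typically emits; your converter output may differ in detail, and the cookie secret is a placeholder. Run this from the project root.

```shell
# Write a hand-cleaned CoffeeScript version of the generated app.js.
# Assumption: Express 3.x generator output; adjust to match your own app.js.
cat > app.coffee <<'EOF'
# Module dependencies
express = require 'express'
routes  = require './routes'
user    = require './routes/user'
http    = require 'http'
path    = require 'path'

app = express()

# Settings and middleware for all environments (Express 3.x API)
app.set 'port', process.env.PORT or 3000
app.set 'views', path.join(__dirname, 'views')
app.set 'view engine', 'jade'
app.use express.favicon()
app.use express.logger('dev')
app.use express.bodyParser()
app.use express.methodOverride()
app.use express.cookieParser('your secret here')  # placeholder secret
app.use express.session()
app.use app.router
app.use express.static(path.join(__dirname, 'public'))

# Development only: verbose error pages
if app.get('env') is 'development'
  app.use express.errorHandler()

# Routes
app.get '/', routes.index
app.get '/users', user.list

http.createServer(app).listen app.get('port'), ->
  console.log "Express server listening on port #{app.get 'port'}"
EOF
```

With this file in place, the server can be started with 'coffee app.coffee' instead of 'node app.js'.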

Configuring bower and components

We will use bower to install client libraries. It's like npm, and helps streamline deployments. For our particular configuration, we store components in the project folder's public directory, since it's already served by Express.

Configure bower

edit /home/<username>/.bowerrc:
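A minimal sketch of that file, assuming components should be installed into public/components relative to the project folder. The folder name is my assumption; the text only specifies the public directory.

```shell
# Create ~/.bowerrc. The "directory" key tells bower where to install
# components, relative to the folder bower is run from.
# Assumption: "public/components" is an illustrative folder name.
cat > "$HOME/.bowerrc" <<'EOF'
{
  "directory": "public/components"
}
EOF
```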

Now that we have our basic rc setup for bower, we need to specify our client library dependencies in our project.

edit /opt/myFirstWebserver/component.json

Bower is now fully set up and ready to install packages.

$ cd /opt/myFirstWebserver
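As a sketch of what that manifest might contain, assuming the site only needs Bootstrap and jQuery: the package versions and the project name below are illustrative assumptions, not values from the original post. Run this from the project root.

```shell
# Write a minimal bower manifest listing the client-side libraries.
# Assumptions: the version ranges and the "name" value are examples only.
cat > component.json <<'EOF'
{
  "name": "myFirstWebserver",
  "version": "0.0.1",
  "dependencies": {
    "jquery": "~1.10.2",
    "bootstrap": "~2.3.2"
  }
}
EOF
```

Newer bower releases expect this manifest to be named bower.json, so rename the file if your bower version complains about component.json.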

$ bower install

Now that we have our client libraries, let's add references to them in the website's layout.

edit ./views/layout.jade:

Commit the changes and push to the code repository

Before we commit our changes, we need to set up a .gitignore file so we don't flood the code repository with files that bower and npm manage for us.

edit /opt/myFirstWebserver/.gitignore
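A minimal sketch of the ignore file, assuming bower installs into public/components (adjust the second entry to whatever "directory" your .bowerrc names). Run this from the project root.

```shell
# Ignore dependencies that npm and bower can reinstall on demand.
# Assumption: bower components live under public/components.
cat > .gitignore <<'EOF'
node_modules/
public/components/
EOF
```

After a fresh clone, running 'npm install' and 'bower install' restores both folders.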

Now that we have successfully created a starter Bootstrap-enabled website, it's time to commit our changes and push them to our source repository. First, we will point the origin remote at the bare repository we created earlier.

$ cd /opt/myFirstWebserver

$ git remote add origin /git/myFirstWebServer.git

Next, we will commit the changes and push them to origin:

$ git add .

$ git commit -a

$ git push -u origin master