MicroServer ESXi White(silver)Box Part 1

I recently researched and built a dedicated home office ESXi 5 server. The goal was to have the capacity to run 4-8 virtual machines. My implementation includes two dedicated Windows Server domain controllers, two Linux servers for a continuous integration development environment, and a dedicated storage appliance (running Ubuntu Linux) that hosts fast SSD and large spindle disk storage. This "datacenter in a box" needed room to add disks, pass through PCIe devices, and potentially team up with more boxes (sharing a central NAS) for a complete "cloud" environment on the cheap. I wanted it to simply "plug n' play", with no dependency on a keyboard, mouse, or display. Everything would be reached over the network, so power and Ethernet are its only requirements. Ultimately this box would end up hidden in a closet or under a desk, out of sight. The greatest requirement was that it be small, quiet, and use as little power as possible.

After a bit of research on building one of these home server whiteboxes (http://ultimatewhitebox.com/), I found a nice blog entry called The Baby Dragon II (http://rootwyrm.us.to/2011/09/better-than-ever-its-the-babydragon-ii/), which detailed a fairly recent (as of 2011) configuration for a home office whitebox ESXi server. From there I compiled an order on Newegg.

Part 1 of this series will detail the hardware procurement and installation. Later parts will discuss ESXi installation, maintenance and caveats.

Motherboard, Processor, and RAM

The most critical aspect in terms of ESXi 5 is to have as many enterprise features as possible for extensibility. Of the virtualization technologies, hardware passthrough is one of the most important. With passthrough, we can pass PCI Express or USB devices directly to a virtual machine. That means I could add a RAID card, drop in a few disks, and hand them to a Linux storage virtual machine, or add a TV tuner card to a Windows 7 Media Center server.

One of the most popular server boards at Newegg right now is from Supermicro: the X9SCM-F-O. This MicroATX motherboard supports VT-d (via the Xeon processor), up to 32GB of RAM, and IPMI, to name a few features. Like the Baby Dragon II, I am using the Xeon E3-1230 processor.

For RAM, I chose Kingston's 8GB Hynix RAM, which worked out to be a great deal. I have only bought 16GB so far, since I am nowhere near using that much RAM in my setup. I am also currently running into overheating issues with the CPU's stock heatsink and fan, which is keeping me from adding more VMs. The overheating occurred while I was running massive Windows Updates on two Server 2008 installs hosted on the fast SSD datastore. Apparently the SSD is so fast that the processor stayed under heavy load the whole time and could not keep cool.

I am not entirely sure I have a real overheating issue, because Supermicro dumbs down the IPMI reading of the CPU temperature sensor, reporting OK, WARN, and CRITICAL states instead of the actual temperature. That is not very useful when you are trying to tell whether CRITICAL means the CPU has reached Intel's recommended maximum temperature. Currently, this is my biggest issue with the setup. When CRITICAL is reached, the motherboard beeps very annoyingly until the load drops. According to Intel's specifications, the maximum case temperature (Tcase) for this processor is 69 degrees C, which I could be reaching with the stock heatsink and fan.

Chassis and Power Supply

Chassis

For the chassis I chose the Lian-Li V354A MicroATX desktop case. It supports up to seven 3.5-inch drives and two 2.5-inch drives. The image to the left shows a 2TB spindle disk on top and a 240GB SSD on the bottom of the drive cage, with the lower drive cage removed.

Power Supply

For the power supply, I chose the SeaSonic X650 Gold EPS 12V. It is modular and rarely runs its fan.

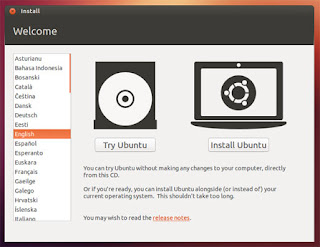

ESXi Installation

For my build, as for the Baby Dragon II, a USB thumb drive holds the ESXi operating system. Since the motherboard has an onboard USB port, this seemed entirely logical. It's slightly faster than a spindle disk and definitely takes up less space.

I used VMware Fusion to install ESXi 5 onto my thumb drive; VMware Player will work as well. Create a new blank virtual machine and pass the USB thumb drive through to it. Don't worry about adding a hard drive to this VM, as you won't need it. Boot the blank VM from the ESXi installer ISO and follow the prompts, choosing the USB thumb drive as the install destination.

This image shows the thumb drive on the left side. In the middle are the six SATA ports (two SATA3 and four SATA2). The lower drive cage is partly in the way of the shot.

Once the system boots from the thumb drive, you can use the ESXi management console (reached through the IPMI remote console) to configure networking.

ESXi Updating without vCenter (or Update Manager)

Since I am using the free version, I need to fill the gaps that vCenter Server would normally cover, and patching is one of them. Thankfully, the process is quite easy. First, we need to know which updates are available.

SSH into your ESXi server and run the following command:

# esxcli software sources vib list -d http://hostupdate.vmware.com/software/VUM/PRODUCTION/main/vmw-depot-index.xml | grep Update

A list of updates (if there are any) will be returned. Download the latest cumulative update from the VMware website (http://www.vmware.com/patchmgr/download.portal) and upload it to a datastore on the ESXi host. Then install it by pointing esxcli at the uploaded file:

# esxcli software vib update -d /vmfs/volumes/datastore_name/patch_location
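The update step can be wrapped in a small function that builds the full bundle path and checks that the file is really there before calling esxcli. This is a minimal sketch, not part of my original process: the function name update_from_bundle, the DRY_RUN switch, and the example names datastore1 and update-bundle.zip are all hypothetical.

```shell
# Hypothetical helper around the update step: builds the full path to the
# uploaded bundle and checks it exists before calling esxcli.
# Arguments: $1 = datastore name, $2 = patch .zip file name (both placeholders).
# DRY_RUN defaults to 1, which only prints the command; set DRY_RUN=0 to install.
update_from_bundle() {
  datastore="$1"
  bundle="$2"
  # esxcli expects the absolute path to the offline bundle on the datastore
  depot="/vmfs/volumes/${datastore}/${bundle}"

  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "esxcli software vib update -d $depot"
    return 0
  fi

  # Stop early if the bundle is not where we expect it
  if [ ! -f "$depot" ]; then
    echo "bundle not found: $depot" >&2
    return 1
  fi

  esxcli software vib update -d "$depot"
}
```

Run it once as `update_from_bundle datastore1 update-bundle.zip` to check the printed path, then again as `DRY_RUN=0 update_from_bundle datastore1 update-bundle.zip` to install. When esxcli finishes, it reports whether a reboot is required; cumulative updates generally need one.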

In another console session, launch the following to keep an eye on the status:

# watch tail -n 50 /var/log/esxupdate.log
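After a failed run, you can also scan the same log for the altbootbank errors discussed in the next section. This is a minimal sketch under an assumption: that the failure lines contain both "error" and "altbootbank". Lines that merely mention altbootbank are ignored, since a normal update may log those too. The function name count_altbootbank_errors is hypothetical.

```shell
# Hypothetical helper: count failure lines that mention altbootbank.
# Assumption: such failures contain both "error" and "altbootbank"; lines
# that only mention altbootbank are ignored, since a normal update may log them.
# $1 = log file (defaults to /var/log/esxupdate.log).
# Prints the count; returns 0 if none were found, 1 if some were, 2 if unreadable.
count_altbootbank_errors() {
  log="${1:-/var/log/esxupdate.log}"
  if [ ! -r "$log" ]; then
    echo "cannot read $log" >&2
    return 2
  fi
  # First grep keeps altbootbank lines, second counts those that say "error".
  # grep -c exits 1 when the count is 0, so "|| true" keeps that from failing.
  count=$(grep -i 'altbootbank' "$log" | grep -ci 'error' || true)
  echo "$count"
  [ "$count" -eq 0 ]
}
```

A non-zero count is a hint to look at the partition fix below, not proof; reading the matching lines with `grep -i altbootbank /var/log/esxupdate.log` is still worthwhile.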

Issues with updating?

If installing the updates fails with an error related to "altbootbank", you will need to "fix" a few partitions on the boot device:

# dosfsck -v -r /dev/disks/partition_id

On my thumb drive, the bad partition was "mpx.vmhba32:C0:T0:L0:5", so I ran the command against /dev/disks/mpx.vmhba32:C0:T0:L0:5. The -r option makes dosfsck repair interactively, so it prompted me when it found errors and I answered Y.
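If you are not sure of your partition ids, a small sketch can list the partitions of one device under /dev/disks. The function name list_partitions is hypothetical, and its optional directory argument exists only so it can be tried away from an ESXi host.

```shell
# Hypothetical helper: list the partition entries of one device under
# /dev/disks, e.g. "mpx.vmhba32:C0:T0:L0" -> "mpx.vmhba32:C0:T0:L0:5", ...
# $1 = device id, $2 = directory to search (defaults to /dev/disks).
list_partitions() {
  device="$1"
  dir="${2:-/dev/disks}"
  # Quoted part is matched literally; only the trailing ":*" is a glob
  for entry in "$dir/$device":*; do
    # When nothing matches, the pattern is left as-is; skip that case
    [ -e "$entry" ] || continue
    # Print just the name, without the directory part
    echo "${entry##*/}"
  done
}
```

For example, `list_partitions mpx.vmhba32:C0:T0:L0` prints one name per partition of my thumb drive; the one the update complained about is what goes after /dev/disks/ in the dosfsck command.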